We believe that current performance metrics such as sensitivity, specificity, and the area under the receiver operating characteristic (ROC) curve do not fully capture the clinical usefulness of models. Performance should be judged by a model's impact on patient care and outcomes, not just by its predictive or generative accuracy.

Successful deployment of AI models must account for constraints such as clinician capacity, staff availability, the EHR data actually available, and the ability to influence social determinants of health. Models should also be evaluated more holistically, quantifying the net benefit of using a model by weighing the costs and benefits of the actions it prompts.
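To make "net benefit" concrete, here is a minimal sketch of one widely used formulation, the net benefit from decision curve analysis: NB = TP/n - FP/n × p_t / (1 - p_t), where p_t is the risk threshold at which a clinician would act. The odds p_t / (1 - p_t) encode how heavily an unnecessary action (false positive) counts against a correctly flagged case (true positive). This is an illustrative assumption, not necessarily the method CHAI or any Assurance Lab uses. The function names and the patient data below are hypothetical.

```python
from typing import Sequence


def net_benefit(y_true: Sequence[int], y_prob: Sequence[float], threshold: float) -> float:
    """Net benefit of acting on model predictions at risk threshold `threshold`.

    Decision-curve formulation: NB = TP/n - FP/n * threshold / (1 - threshold).
    The odds threshold / (1 - threshold) weight each false positive relative to
    a true positive.
    """
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be strictly between 0 and 1")
    if len(y_true) != len(y_prob) or len(y_true) == 0:
        raise ValueError("y_true and y_prob must be non-empty and the same length")
    n = len(y_true)
    # Outcomes of the patients the model would flag for action at this threshold.
    flagged = [y for y, p in zip(y_true, y_prob) if p >= threshold]
    tp = sum(1 for y in flagged if y == 1)
    fp = len(flagged) - tp
    return tp / n - (fp / n) * threshold / (1 - threshold)


def net_benefit_treat_all(y_true: Sequence[int], threshold: float) -> float:
    """Reference strategy: act on every patient, ignoring the model."""
    n = len(y_true)
    prevalence = sum(y_true) / n
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)


if __name__ == "__main__":
    # Hypothetical outcomes (1 = event occurred) and model risk scores, for illustration only.
    y_true = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
    y_prob = [0.9, 0.2, 0.7, 0.4, 0.1, 0.35, 0.6, 0.05, 0.3, 0.15]
    for pt in (0.2, 0.5):
        model = net_benefit(y_true, y_prob, pt)
        treat_all = net_benefit_treat_all(y_true, pt)
        # "Treat none" always has a net benefit of 0.
        print(f"threshold={pt}: model={model:.3f}, treat_all={treat_all:.3f}, treat_none=0.000")
```

On this toy data, at a threshold of 0.5 the model's net benefit is 0.1, versus -0.4 for acting on everyone and 0 for acting on no one. A model is only worth deploying at a given threshold if it beats both reference strategies, a comparison that sensitivity, specificity, or AUROC alone does not make.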

To address this need, we are supporting Assurance Labs in applying a rigorous framework for evaluating ML models. This framework goes beyond mathematical metrics and assesses models in practice on real-world health data to determine whether they are truly safe and effective for use in healthcare. Critically, this evaluation process will also ensure that the Assurance Labs themselves are trustworthy enough for private-sector technology vendors to rely on. A report card issued to a vendor by a CHAI-certified Assurance Lab can then serve as independent validation of a model’s claimed indications, fostering trust between users and vendors.

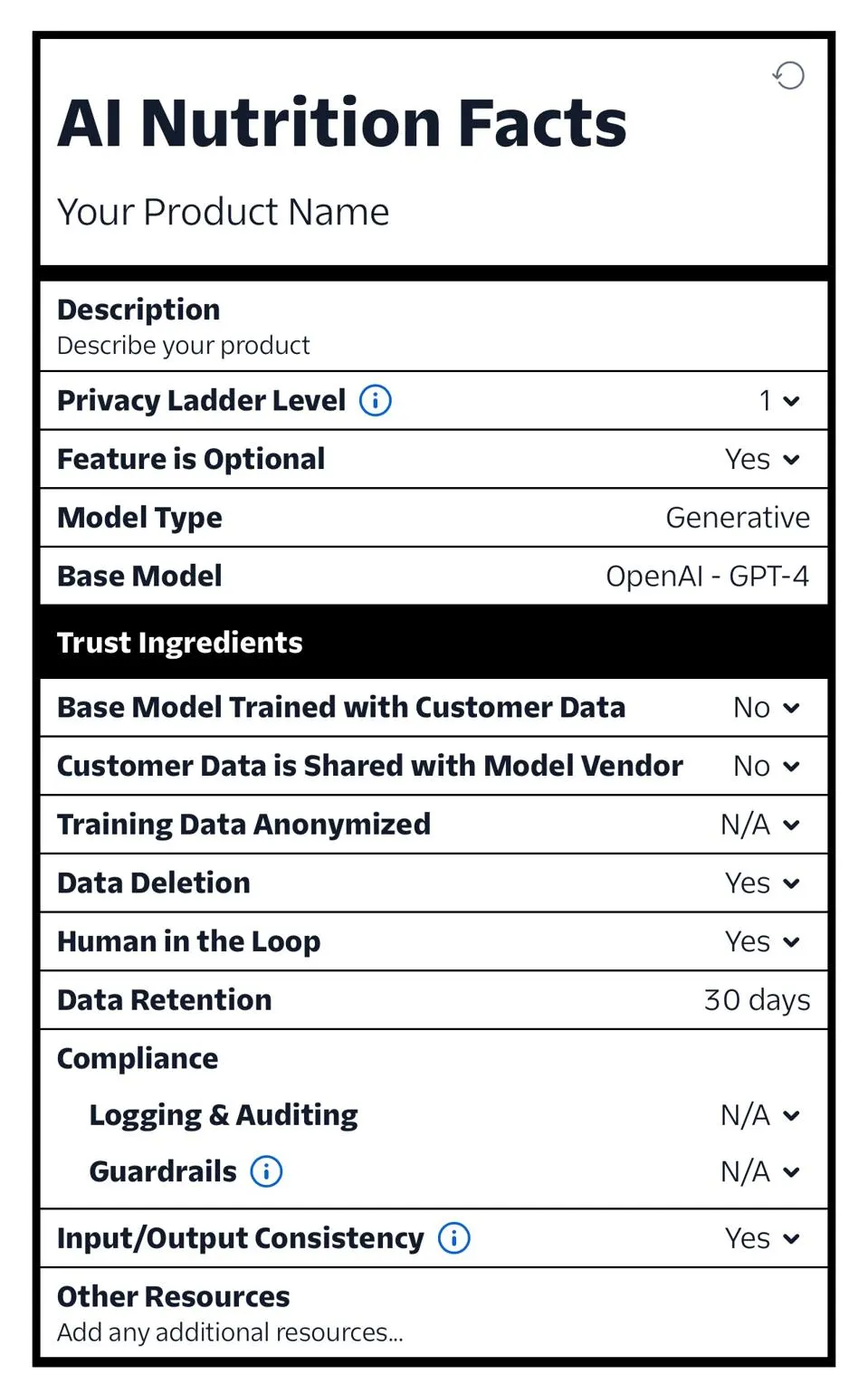

Serving as a ‘nutrition label’ of sorts, these model report cards will be publicly available, giving the community, users, and end recipients of any algorithm greater transparency and strengthening trust and accountability.

For more on the relevant HHS agencies’ policies and how we plan to dovetail with them, see ONC’s HTI-1 final rule and OCR’s final rule on Section 1557 (an online review connecting the OCR rule and HTI-1 is also available).